Think of the internet as a Pandora’s Box. The internet has become so powerful because of data and data about data.

For example, when you search the internet for something, you search for data about data. And a search proves useful only because someone preserved the information you were seeking somewhere on the internet. As technology has advanced to support all this data, so too has the demand for big data applications.

In earlier times, data was preserved in simple data files. As the complexity of data grew, database management systems came into existence. Soon both structured and unstructured data were being generated at massive scale, earning the name “BIG DATA.”

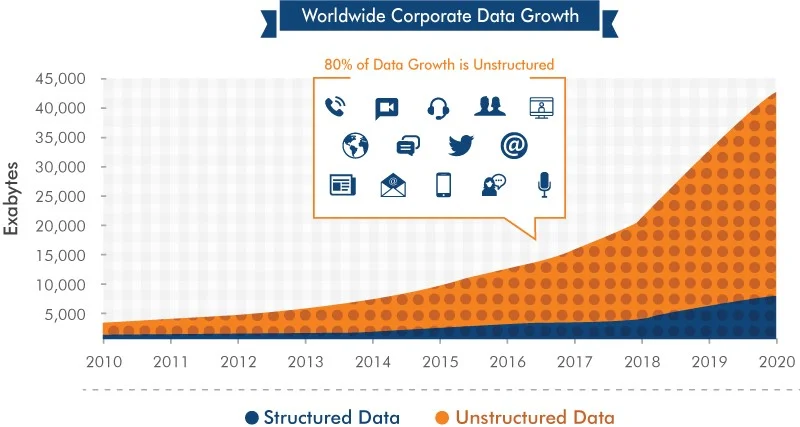

Just how big is big data? Check out this big data testing graph:

Figure: Worldwide Corporate Data Growth. Source: IDC’s Digital Universe Study

This big data testing tutorial guides you through the process of creating a big data testing strategy, discusses the best tools for big data testing and answers your most pressing QA testing questions, including:

- What is big data software testing?

- What are big data testing challenges?

- What big data testing best practices should you follow?

- What big data testing techniques should you include in your strategy?

- What big data automation testing tools should your tech stack include?

What Is Big Data Software Testing?

Big data testing is the process of performing QA testing on big data applications. Since big data is a collection of large datasets that cannot be processed using traditional computing techniques, traditional data testing methods do not apply to big data. This means your big data testing strategy should include big data testing techniques, big data testing methods and big data automation tools, such as Apache Hadoop. Let us first explore how to test data of gigantic size.

After reviewing multiple big data case studies, you can expect to find that successful teams include the same types of big data testing methods.

We advocate the inclusion of the following tests within your big data QA strategy:

Functional Testing

Testing the front-end application supports data validation: you can compare the actual results produced by the front-end application against expected results, and you gain insight into the application framework and its various components.

Performance Testing

Automation in big data allows you to test performance under different conditions, such as testing the application with different varieties and volumes of data. Performance testing big data applications is one of the most important big data testing techniques because it ensures that the components involved provide efficient storage, processing and retrieval capabilities for large data sets.
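As a rough illustration of testing performance at different data volumes, here is a minimal Python sketch. The `process` function is a hypothetical stand-in for whatever job you are measuring, and the volumes are arbitrary; a real test would run against the actual cluster and far larger datasets.

```python
import time

def process(records):
    """Stand-in workload (hypothetical): sum record values per key."""
    totals = {}
    for key, value in records:
        totals[key] = totals.get(key, 0) + value
    return totals

def measure(volumes, keys=100):
    """Time the workload at each data volume and return rows per second."""
    results = {}
    for volume in volumes:
        records = [(i % keys, i) for i in range(volume)]
        start = time.perf_counter()
        process(records)
        elapsed = time.perf_counter() - start
        # Guard against a zero reading on very coarse clocks.
        results[volume] = volume / elapsed if elapsed > 0 else float("inf")
    return results

throughput = measure([1_000, 10_000, 100_000])
for volume, rows_per_sec in throughput.items():
    print(f"{volume:>7} rows: {rows_per_sec:,.0f} rows/s")
```

Comparing throughput across volumes shows whether processing time grows in proportion to the data or degrades faster than expected.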

Data Ingestion Testing

Include this type of testing within your data testing methods so that you verify that all data is extracted and loaded correctly within the big data application.
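One common way to check that everything was extracted and loaded is to reconcile record counts and per-record fingerprints between source and target. The sketch below assumes records are simple dictionaries held in memory; the function names are illustrative, not part of any tool.

```python
import hashlib

def row_fingerprint(row):
    """Return a stable SHA-256 hex digest for one record (a dict)."""
    canonical = "|".join(f"{key}={row[key]}" for key in sorted(row))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def reconcile(source_rows, loaded_rows):
    """Compare source and loaded records by count and by fingerprint."""
    source_prints = {row_fingerprint(row) for row in source_rows}
    loaded_prints = {row_fingerprint(row) for row in loaded_rows}
    return {
        "source_count": len(source_rows),
        "loaded_count": len(loaded_rows),
        "missing": len(source_prints - loaded_prints),     # in source, not loaded
        "unexpected": len(loaded_prints - source_prints),  # loaded, not in source
    }

source = [
    {"id": 1, "city": "Pune"},
    {"id": 2, "city": "Austin"},
    {"id": 3, "city": "Lagos"},
]
loaded = [{"id": 1, "city": "Pune"}, {"id": 3, "city": "Lagos"}]
report = reconcile(source, loaded)
print(report)
```

At real scale the same idea is usually applied per partition or per file rather than on whole datasets in memory.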

Data Processing Testing

Your big data testing strategy should include tests in which your data automation tools focus on how ingested data is processed and validate whether the business logic is implemented correctly by comparing output files with input files.
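To make the comparison of output against input concrete, the sketch below recomputes a business rule independently from the input and diffs it against the job's output. The rule (total amount per region) and the `job_output` values are assumptions made up for illustration.

```python
from collections import defaultdict

def expected_totals(input_records):
    """Independently recompute the assumed rule: total amount per region."""
    totals = defaultdict(float)
    for record in input_records:
        totals[record["region"]] += record["amount"]
    return dict(totals)

def diff_outputs(expected, actual, tolerance=1e-9):
    """Return {key: (expected, actual)} for every key that disagrees."""
    mismatches = {}
    for key in expected.keys() | actual.keys():
        exp, act = expected.get(key), actual.get(key)
        if exp is None or act is None or abs(exp - act) > tolerance:
            mismatches[key] = (exp, act)
    return mismatches

input_records = [
    {"region": "east", "amount": 10.0},
    {"region": "west", "amount": 5.0},
    {"region": "east", "amount": 2.5},
]
# Hypothetical output of the job under test; "west" is deliberately wrong.
job_output = {"east": 12.5, "west": 6.0}

mismatches = diff_outputs(expected_totals(input_records), job_output)
print(mismatches)
```

An empty result means the output agrees with the recomputed expectation; any entry points at a key where the business logic may be wrong.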

Data Storage Testing

With the help of big data automation testing tools, QA testers can verify the output data is correctly loaded into the warehouse by comparing output data with the warehouse data.

Data Migration Testing

This type of big data software testing applies whenever an application moves to a different server or undergoes a technology change. Data migration testing validates that the migration of data from the old system to the new system involves minimal downtime and no data loss.
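A first-pass check for data loss during migration is to compare row counts table by table between the old and new systems. The sketch below uses two in-memory SQLite databases as stand-ins for those systems; the table names and the `make_db` helper are hypothetical.

```python
import sqlite3

def table_counts(conn):
    """Return {table_name: row_count} for every table in a SQLite database."""
    names = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    return {
        name: conn.execute(f'SELECT COUNT(*) FROM "{name}"').fetchone()[0]
        for name in names
    }

def migration_gaps(old_conn, new_conn):
    """Return {table: (old_count, new_count)} where the counts differ."""
    old, new = table_counts(old_conn), table_counts(new_conn)
    return {
        name: (old.get(name), new.get(name))
        for name in old.keys() | new.keys()
        if old.get(name) != new.get(name)
    }

def make_db(orders, customers):
    """Build a small demo database (stand-in for a real system)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER)")
    conn.execute("CREATE TABLE customers (id INTEGER)")
    conn.executemany("INSERT INTO orders VALUES (?)", [(i,) for i in range(orders)])
    conn.executemany("INSERT INTO customers VALUES (?)", [(i,) for i in range(customers)])
    return conn

gaps = migration_gaps(make_db(5, 3), make_db(4, 3))
print(gaps)
```

Matching counts do not prove the rows are identical, so a count check like this is usually followed by checksum or sample-based comparisons.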

Big Data Testing Challenges

When testing unstructured data, challenges are to be expected, especially when the team has limited experience with the tools used in big data scenarios.

Heterogeneity and Incompleteness of Data

Problem: Many businesses today store exabytes of data in order to conduct daily business. Testers must audit this voluminous data to confirm its accuracy and relevance for the business. Manually testing this volume of data, even with hundreds of QA testers, is impossible.

Solution: Automation in big data is essential to your big data testing strategy. In fact, data automation tools are designed to review the validity of this volume of data. Make sure to assign QA engineers skilled in creating and executing automated tests for big data applications.

High Scalability

Problem: A significant increase in workload volume can drastically impact database accessibility, processing and networking for the big data application. Even though big data applications are designed to handle enormous amounts of data, they may not be able to handle immense workload demands.

Solution: Your data testing methods should include the following testing approaches:

Clustering Technique: Distribute large amounts of data equally among all nodes of a cluster. These large data files can then be easily split into different chunks and stored in different nodes of a cluster. By replicating file chunks and storing within different nodes, machine dependency is reduced.

Data Partitioning: This approach to automation in big data is less complex and easier to execute. By partitioning the data, your QA testers can run tests in parallel at the CPU level.
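Both approaches can be sketched in a few lines of Python. The first function places replicated chunks on cluster nodes in round-robin order, and the other two hash-partition records and process the partitions concurrently. The node names, replication factor and round-robin policy are assumptions for illustration, not how any particular framework places data. A thread pool keeps the example easy to run; for CPU-bound work in Python you would normally swap in a process pool.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor

def place_chunks(num_chunks, nodes, replication=2):
    """Assign each chunk to `replication` distinct nodes, round-robin."""
    if replication > len(nodes):
        raise ValueError("replication factor exceeds node count")
    return {
        chunk: [nodes[(chunk + r) % len(nodes)] for r in range(replication)]
        for chunk in range(num_chunks)
    }

def partition(records, num_partitions):
    """Hash-partition (key, value) records so equal keys share a partition."""
    parts = [[] for _ in range(num_partitions)]
    for key, value in records:
        # crc32 is stable across runs, unlike Python's built-in hash() for str.
        index = zlib.crc32(key.encode("utf-8")) % num_partitions
        parts[index].append((key, value))
    return parts

def parallel_total(records, num_partitions=4):
    """Sum values per partition concurrently, then combine the partial sums."""
    parts = partition(records, num_partitions)
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partials = list(pool.map(lambda part: sum(v for _, v in part), parts))
    return sum(partials)

placement = place_chunks(4, ["n1", "n2", "n3"], replication=2)
records = [(f"user{i}", i) for i in range(100)]
total = parallel_total(records)
print(placement)
print(total)
```

Because every chunk lives on two different nodes, losing any single node still leaves one copy of each chunk, which is the reduced machine dependency described above.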

Test Data Management

Problem: It’s not easy to manage test data when it’s not understood by your QA testers. Tools used in big data scenarios can only carry your team so far when it comes to migrating, processing and storing test data if your QA team doesn’t understand the components within the big data system.

Solution: First, your QA team should coordinate with both your marketing and development teams in order to understand data extraction from different resources, data filtering, and pre- and post-processing algorithms. Then provide proper training to the QA engineers designated to run test cases through your big data automation tools so that test data is always properly managed.

Best Big Data Testing Tools

Your QA testers can only enjoy the advantages of big data validation when strong testing tools are in place. We recommend reviewing these highly rated big data testing tools when developing your big data testing strategy:

Hadoop

Most expert data scientists would argue that a tech stack is incomplete without this open-source framework. Hadoop can store massive amounts of various data types as well as handle innumerable tasks with top-of-class processing power. Make sure your QA engineers executing Hadoop performance testing for big data have knowledge of Java.

HPCC

Standing for High-Performance Computing Cluster, this free tool is a complete big data application solution. HPCC features a highly scalable supercomputing platform with an architecture that provides high performance in testing by supporting data parallelism, pipeline parallelism and system parallelism. Ensure your QA engineers understand C++ and the ECL programming language.

Cloudera

Often referred to as CDH (Cloudera’s Distribution Including Apache Hadoop), Cloudera is an ideal testing tool for enterprise-level deployments of technology. This open source tool offers a free platform distribution that includes Apache Hadoop, Apache Impala, and Apache Spark. Cloudera is easy to implement, offers high security and governance, and allows teams to gather, process, administer, manage and distribute very large amounts of data.

Cassandra

Big industry players choose Cassandra for their big data testing. This free, open source tool features a high-performing, distributed database designed to handle massive amounts of data on commodity servers. Cassandra offers automatic replication, linear scalability and no single point of failure, making it one of the most reliable tools for big data testing.

Storm

This free, open source testing tool supports real-time processing of unstructured data sets and can be used with any programming language. Storm is reliable at scale, fault-tolerant and guarantees that your data will be processed. This cross-platform tool offers multiple use cases, including log processing, real-time analytics, machine learning and continuous computation.

Benefits of Big Data Testing

Big data testing is designed to confirm that data is accurate, intact and of high quality. The application can only improve once you have confirmed that the data collected from different sources and channels behaves as expected.

What additional benefits of big data testing are in store for your team? Here are some advantages that come to mind:

Data Accuracy

Every organization strives for accurate data for business planning, forecasting and decision-making. This data needs to be validated for its correctness in any big data application. This validation process should confirm that:

- the data ingestion process is error-free

- complete and correct data is loaded to the big data framework

- the data process validation is working properly based on the designed logic

- the data output in the data access tools is accurate as per the requirement
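Checks like these often come down to rule-based validation of individual records. Here is a minimal sketch; the field names (`customer_id`, `amount`, `currency`) and the allowed currency set are hypothetical and would come from your own requirements.

```python
ALLOWED_CURRENCIES = {"USD", "EUR", "INR"}  # assumed rule, for illustration

def validate_record(record):
    """Return a list of rule violations for one record (empty means valid)."""
    errors = []
    if record.get("customer_id") in (None, ""):
        errors.append("customer_id is missing")
    amount = record.get("amount")
    if not isinstance(amount, (int, float)) or amount < 0:
        errors.append("amount must be a non-negative number")
    if record.get("currency") not in ALLOWED_CURRENCIES:
        errors.append("currency is not in the allowed set")
    return errors

records = [
    {"customer_id": "c1", "amount": 19.99, "currency": "USD"},
    {"customer_id": "", "amount": 5, "currency": "EUR"},
    {"customer_id": "c3", "amount": -2, "currency": "GBP"},
]

# Map record index -> violations, keeping only records that fail a rule.
failures = {}
for index, record in enumerate(records):
    errors = validate_record(record)
    if errors:
        failures[index] = errors
print(failures)
```

Running the same rules after ingestion, after processing and at the access layer makes it easier to see at which stage bad data first appears.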

Cost-Effective Storage

Behind every big data application, there are multiple machines that store the data ingested from different servers into the big data framework. All data requires storage, and storage doesn’t come cheap. That’s why it’s important to thoroughly validate that the ingested data is properly stored in different nodes based on the configuration, such as the data replication factor and data block size.

Keep in mind that any data that is not well structured or is in bad shape requires more storage. Once that data is tested and structured, it consumes less storage and ultimately becomes more cost-effective.
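The replication factor and block size mentioned above translate directly into expected block counts and raw storage, which gives testers a number to check against. The sketch below assumes a 128 MiB block size and a replication factor of 3, values commonly used as HDFS defaults; your cluster's configuration may differ.

```python
import math

MIB = 1024 * 1024

def storage_plan(file_size_bytes, block_size_bytes=128 * MIB, replication=3):
    """Estimate block count and raw storage for one file.

    Assumes the last block is not padded, so raw storage is simply
    file size times replication factor.
    """
    blocks = math.ceil(file_size_bytes / block_size_bytes)
    return {
        "blocks": blocks,
        "block_replicas": blocks * replication,
        "raw_bytes": file_size_bytes * replication,
    }

plan = storage_plan(300 * MIB)
print(plan)
```

For a 300 MiB file this yields 3 blocks, 9 block replicas and 900 MiB of raw storage, so a test can compare those figures against what the cluster actually reports.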

Effective Decision-Making and Business Strategy

Accurate data is the pillar of crucial business decisions. When the right data reaches the right people, it becomes an asset. It helps in analyzing all kinds of risks, and because only the data that contributes to the decision-making process comes into the picture, it ultimately becomes a great aid in making sound decisions.

Right Data at the Right Time

A big data framework consists of multiple components. Any component can lead to bad performance in data loading or processing. No matter how accurate the data may be, it is of no use if it is not available at the right time. Applications that undergo load testing with different volumes and varieties of data can quickly process a large amount of data and make the information available when required.

Reduces Deficit and Boosts Profits

Poor-quality big data becomes a major liability for the business because it is difficult to determine the cause and location of errors. On the other hand, accurate data improves the overall business, including the decision-making process. Testing such data isolates the useful data from the unstructured or bad data, which will enhance customer services and boost business revenue.

Next Steps for Your Big Data Testing Strategy

Performing comprehensive testing on big data requires expert knowledge in order to achieve robust results within the defined timeline and budget. You can only access the best practices for testing big data applications by making use of a dedicated team of QA experts with extensive experience in testing big data, be it an in-house team or outsourced resources.

Looking for help with your big data testing? Choose to partner with a QA services provider like AppleTech. Our team of testing experts is skilled in big data testing and can help you create a strong big data testing strategy for your big data application. Get in touch with us today.